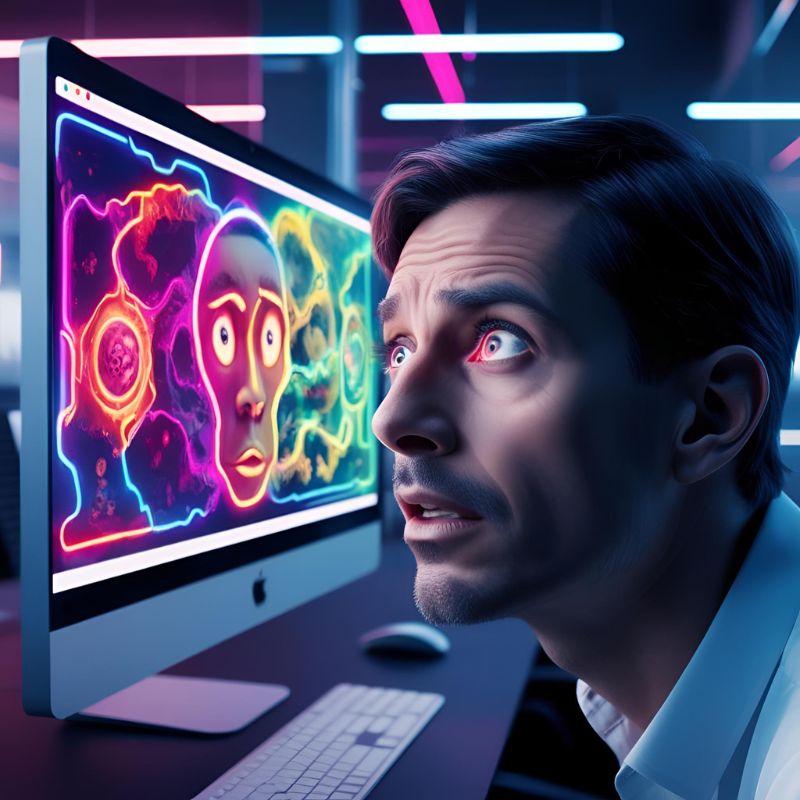

AI is taking a toll on people’s mental faculties, a report in Rolling Stone suggests. Reddit users have shared alarming stories of ChatGPT feeding people mystical fantasies and mania, leading them into delusions about their existence and purpose in the world. Some report that family members and friends have come to believe they are chosen ones destined to fulfil a sacred mission, or that they are being guided by a sentient AI towards a larger-than-life purpose. This mirroring and magnifying of users’ mental health issues is disturbing, and it has largely escaped the scrutiny of regulators.

If you think such delusions are minor problems that users can easily escape, think again. A 41-year-old woman said that her marriage has fallen apart because her husband became completely consumed by conspiratorial conversations with ChatGPT. The man began harbouring paranoid beliefs, including a conspiracy theory about the presence of soap in food, and came to believe that he is a “spiral starchild” and “river walker” after ChatGPT fed him a stream of ridiculous spiritual jargon.

Others complain that their partners have been led to believe that a war is under way between light and darkness, that blueprints for teleporters exist, and that a spiritual awakening is coming. One man told Rolling Stone that his wife has decided to become a spiritual advisor and is already holding strange spiritual sessions with people.

The concern does not lie with people’s delusions alone. The bigger problem lies in the way OpenAI has built ChatGPT to be sycophantic and flattering. Its responses are often agreeable and reassuring, so it goes along with people’s delusions and deepens them over the course of a conversation. Delusions thrive on a conversational partner with whom they can be shared and co-experienced. That is why people with existing delusional tendencies are more likely to share their fantasies with ChatGPT, which reinforces their beliefs by producing a statistically plausible response and thus adds more fuel to the fire. Imagine how much worse this can get for people who are experiencing psychosis or living with schizophrenia: ChatGPT will simply continue to affirm their false beliefs and worsen their condition.

This opens a new area of deliberation for ethicists, policymakers, AI developers and healthcare experts. ChatGPT’s tendency to shower people with reassurances and compliments that nothing in the conversation warrants is distressing. Clearly, a lot of work remains before we have a sentient and rational AI that offers answers grounded in reality rather than in sycophancy and fantasy.